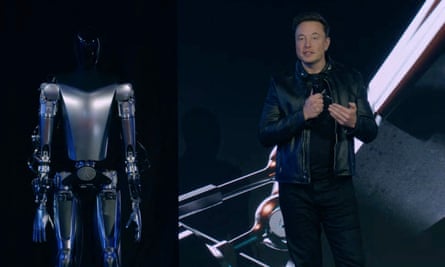

When Elon Musk introduced the team behind his new artificial intelligence company xAI last month, the billionaire entrepreneur took a question from the rightwing media activist Alex Lorusso. ChatGPT had begun “editorializing the truth” by giving “weird answers like that there are more than two genders”, Lorusso posited. Was that a driver behind Musk’s decision to launch xAI, he wondered.

“I do think there is significant danger in training AI to be politically correct, or in other words training AI to not say what it actually thinks is true,” Musk replied. His own company’s AI, on the other hand, would be “maximally true”, he had said earlier in the presentation.

It was a common refrain from Musk, one of the world’s richest people, CEO of Tesla and owner of the platform formerly known as Twitter.

“The danger of training AI to be woke – in other words, lie – is deadly,” Musk tweeted last December in a reply to Sam Altman, the OpenAI founder.

Musk’s relationship with AI is complicated. He has warned about the existential threat of AI for around a decade and recently signed an open letter airing concerns it would destroy humanity, though he has simultaneously worked to advance the technology’s development. He was an early investor and board member of OpenAI, and has said his new AI company’s goal is “to understand the true nature of the universe”.

But his critique of currently dominant AI models as “too woke” has added to a larger rightwing rallying cry that has emerged since the boom in publicly available generative AI tools throughout this year. As billions of dollars pour into the arms race to create ever-more advanced artificial intelligence, generative AI has also become one of the latest battlefronts in the culture war, threatening to shape how the technology is operated and regulated at a critical time in its development.

AI enters the culture war

Republican politicians have railed against the large AI companies in Congress and on the campaign trail. At a campaign rally in Iowa late last month, Ron DeSantis, Republican presidential candidate and Florida governor, warned that big AI companies used training data that was “more woke” and contained a political agenda.

Conservative activists such as Christopher Rufo – who is generally credited with stirring the right’s moral panic around critical race theory being taught in schools – warned their followers on social media that “woke AI” was an urgent threat. Major conservative publications like Fox News and The National Review amplified those fears, with the latter arguing that ChatGPT had succumbed to “woke ideology”.

And rightwing activists have slammed an executive order by Joe Biden that concerned equity in AI development as an authoritarian action to promote “woke AI”. One fellow at the Manhattan Institute, a conservative thinktank, described the order as part of an “ideological and social cancer”.

The right’s backlash against generative AI contains echoes of those same figures’ pushback against content moderation policies on social media platforms. Much like those policies, many of the safeguards in place on AI models like ChatGPT are intended to prevent the use of the technology for the promotion of hate speech, disinformation or political propaganda. But the right has framed those content moderation decisions as a plot by big tech and liberal activists to silence conservatives.

Meanwhile, experts say, their critiques of AI attribute too much agency to generative AI models and assume that services can hold viewpoints as if they were sentient beings.

“All generative AI does is remix and regurgitate stuff in its source material,” said Meredith Broussard, a professor at New York University and author of the book More than a Glitch: Confronting Race, Gender, and Ability Bias in Tech. “It’s not magic.”

Lost entirely in the discussions among rightwing critics, experts say, is the way AI systems tend to exacerbate existing inequalities and harm marginalized groups. Text-to-image models like Stable Diffusion create images that tend to amplify stereotypes around race and gender. An investigation from the Markup found algorithms designed to evaluate mortgage applications ended up denying applicants of color at a 40 to 80% higher rate than their white equivalents. Racial bias in facial recognition technology has contributed to wrongful arrests, while states expand the use of AI-assisted surveillance.

A culture war with consequences

Still, the rightwing critiques of AI leaders are already having consequences. Coming amid a new push by Republicans against academics and officials who monitor disinformation, and a lawsuit by Musk against the anti-hate speech organization Center for Countering Digital Hate, whose work the billionaire says has resulted in tens of millions of dollars in lost revenue on Twitter, the “anti-woke AI” campaign is putting pressure on AI companies to appear politically neutral.

After the initial wave of backlash from conservatives, OpenAI published a blog post in February that appeared aimed at appeasing critics across the political spectrum and vowed to invest resources to “reduce both glaring and subtle biases in how ChatGPT responds to different inputs”.

And when speaking in March with the podcast host Lex Fridman, who has become popular among anti-woke cultural crusaders like Jordan Peterson and tech entrepreneurs like Musk, OpenAI’s chief executive, Sam Altman, said of ChatGPT: “I think it was too biased and will always be. There will be no one version of GPT that everyone agrees is unbiased.”

Rightwing activists have also made several rudimentary attempts at launching their own “anti-woke” AI. The CEO of Gab, a social media platform favored by white nationalists and other members of the far right, announced earlier this year that his site was launching its own AI service. “Christians must enter the AI arms race,” Andrew Torba proclaimed, accusing existing models of having a “satanic worldview”. A chatbot on the platform Discord called “BasedGPT” was trained on Facebook’s leaked large language model, but its output was often factually inaccurate or nonsensical, and it was unable to answer basic questions.

Those previous attempts have failed to gain mainstream traction.

It remains to be seen where xAI is headed. The company says on its website that its goal is to “understand the true nature of the universe” and has recruited an all-male staff of researchers from companies such as OpenAI and institutions like the University of Toronto. It’s unclear what ethical principles it will operate under, beyond Musk’s vows that it will be “maximally true”. The company has signed up the AI researcher Dan Hendrycks from the Center for AI Safety as an adviser. Hendrycks has previously warned about the long-term risks AI poses to humanity, a fear Musk has said he shares and which aligns with the longtermist beliefs he has frequently endorsed.

Musk, xAI, Hendrycks and the Center for AI Safety could not be reached for comment.

Despite the futuristic discussions from figures such as Musk around AI models learning biases as they become sentient and potentially omnipotent forces, some researchers believe that the simpler answer to why these models don’t behave as people want them to is that they are janky, prone to error and reflective of current political polarization. Generative AI, which is trained on human-generated datasets and uses that material to produce outputs, is less an independent thinker than a mirror that reflects the fractious state of online content.

“The internet is very wonderful and also very toxic,” Broussard said. “Nobody’s happy with the stuff that’s out there on the internet, so I don’t know why they’d be happy with the stuff coming out of generative AI.”