
AI-generated images and deepfakes can be detected by analyzing the reflections in a person’s eyes, using a technique borrowed from astronomy.

According to new research shared by the Royal Astronomical Society, AI-generated fakes can be analyzed the same way astronomers study galaxies.

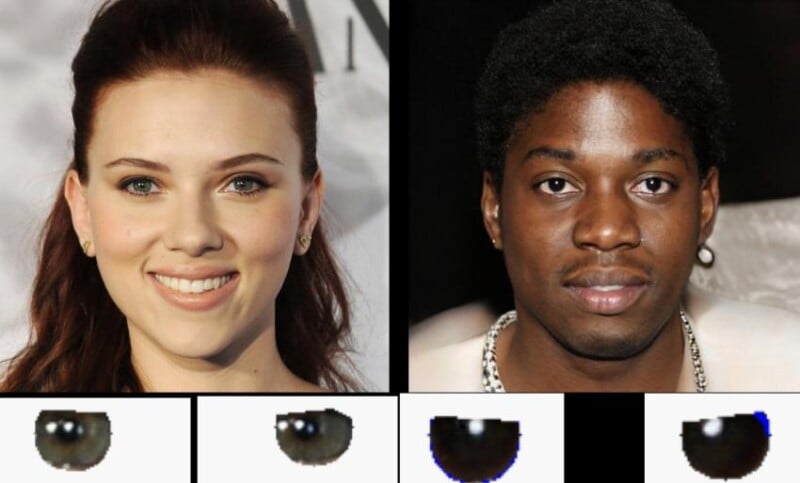

University of Hull MSc student Adejumoke Owolabi concludes that it comes down to the reflections in a person’s eyes. If the reflections in the two eyes match, the image is likely to be of a real human. If they don’t, it is probably a deepfake.

“The reflections in the eyeballs are consistent for the real person, but incorrect (from a physics point of view) for the fake person,” explains Kevin Pimbblet, professor of astrophysics and director of the Centre of Excellence for Data Science, Artificial Intelligence, and Modelling at the University of Hull.

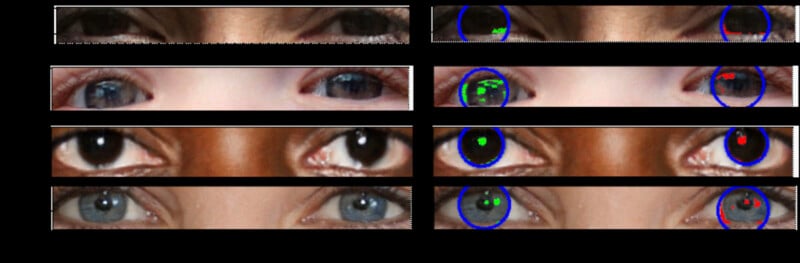

The researchers analyzed reflections of light on the eyeballs of people in real and AI-generated images. They then borrowed a method typically used in astronomy to quantify the reflections and checked for consistency between left and right eyeball reflections.

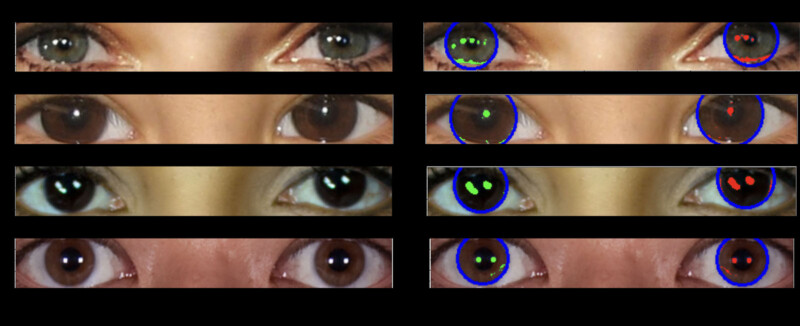

“To measure the shapes of galaxies, we analyze whether they’re centrally compact, whether they’re symmetric, and how smooth they are. We analyze the light distribution,” says Professor Pimbblet.

“We detect the reflections in an automated way and run their morphological features through the CAS [concentration, asymmetry, smoothness] and Gini index to compare similarity between left and right eyeballs.

“The findings show that deepfakes have some differences between the pair.”
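As a rough illustration of the "asymmetry" part of CAS, the sketch below rotates a small brightness grid by 180 degrees and measures how much it differs from the original. It uses a simplified form of the rotational asymmetry index with the background correction left out, and the function name `rotational_asymmetry` and the sample grids are hypothetical. It is not the researchers' own code, which the article does not show.

```python
def rotational_asymmetry(image):
    """Simplified rotational asymmetry of a 2D grid of pixel brightness values.

    Assumption: A = sum(|I - I_180|) / sum(|I|), with no background term.
    Returns 0.0 for a grid that looks identical after a 180-degree rotation.
    """
    # Rotate by 180 degrees: reverse the row order and reverse each row.
    rotated = [row[::-1] for row in image[::-1]]

    difference = sum(
        abs(a - b)
        for row, rotated_row in zip(image, rotated)
        for a, b in zip(row, rotated_row)
    )
    total = sum(abs(a) for row in image for a in row)
    if total == 0:
        raise ValueError("image has no flux")
    return difference / total


# Hypothetical cut-outs of a reflection: one symmetric, one lopsided.
symmetric_patch = [[1, 2, 1], [2, 5, 2], [1, 2, 1]]
lopsided_patch = [[1, 0], [0, 0]]

print(rotational_asymmetry(symmetric_patch))
print(rotational_asymmetry(lopsided_patch))
```

In this sketch, computing the same value for the left-eye and right-eye reflections and comparing the two numbers would be one way to check the pair for consistency.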

The Gini index is normally used to measure how the light in an image of a galaxy is distributed among its pixels. The measurement is made by sorting the pixels that make up the image in ascending order of flux and then comparing the result to what would be expected from a perfectly even flux distribution.

A Gini value of 0 corresponds to a galaxy whose light is evenly distributed across all of the image’s pixels, while a Gini value of 1 corresponds to a galaxy with all of its light concentrated in a single pixel.
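The calculation described above can be sketched in a few lines of Python. This is a minimal illustration using the form of the Gini coefficient commonly applied to galaxy images, not the researchers' own code. The function names `gini` and `gini_difference` and the sample pixel values are hypothetical.

```python
def gini(pixel_fluxes):
    """Gini coefficient of a flat list of pixel flux values.

    Uses G = sum((2i - n - 1) * |x_i|) / (mean * n * (n - 1)),
    with the pixels sorted in ascending order and i counted from 1.
    """
    values = sorted(abs(v) for v in pixel_fluxes)  # ascending order of flux
    n = len(values)
    total = sum(values)
    if n < 2 or total == 0:
        raise ValueError("need at least two pixels and a non-zero total flux")
    mean = total / n
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (mean * n * (n - 1))


def gini_difference(left_eye, right_eye):
    """Absolute difference between the Gini values of two reflections."""
    return abs(gini(left_eye) - gini(right_eye))


# The two limiting cases: perfectly even light, and all light in one pixel.
print(gini([1, 1, 1, 1]))
print(gini([0, 0, 0, 5]))

# Hypothetical pixel values for a pair of eye reflections.
print(gini_difference([1, 2, 3, 10], [1, 2, 3, 9]))
```

A small difference between the two eyes would be consistent with matching reflections. Any cut-off for deciding what counts as "small" would be an assumption, since the article does not give one.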

“It’s important to note that this is not a silver bullet for detecting fake images,” Professor Pimbblet says.

“There are false positives and false negatives; it’s not going to get everything. But this method provides us with a basis, a plan of attack, in the arms race to detect deepfakes.”

Image credits: Royal Astronomical Society.