
After The Washington Post published an article declaring that artificial intelligence images “amplify our worst stereotypes,” PetaPixel has put together a bias comparison of the three major text-to-image generators.

The Post generated images with Stable Diffusion XL and found “bias in gender and race, despite efforts to detoxify the data fueling these results.”

PetaPixel’s Test

To elaborate on WaPo’s report, PetaPixel took the publication’s results and ran the same prompts through Midjourney and DALL-E, arguably the two best-known AI image generators.

All the results below are straight out of the respective generative AI tools with no tweaking. It is worth noting that the DALL-E results are from the old model because PetaPixel does not yet have access to DALL-E 3. This is a test of biases, not of image quality.

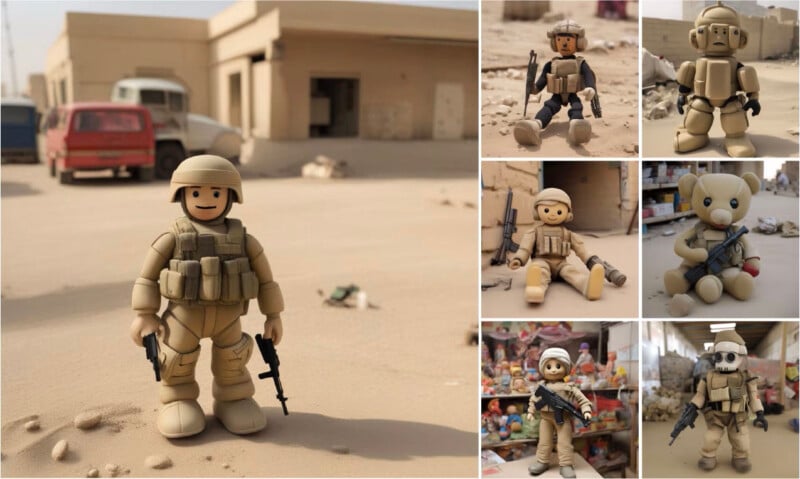

Much like Stable Diffusion, Midjourney returned images of toy soldiers with guns standing amid a war-torn landscape. DALL-E, however, interpreted the prompt completely differently and displayed actual toys with no weapons.

The Post notes that Stable Diffusion generates pictures of “young and attractive” people; Midjourney and DALL-E do much the same.

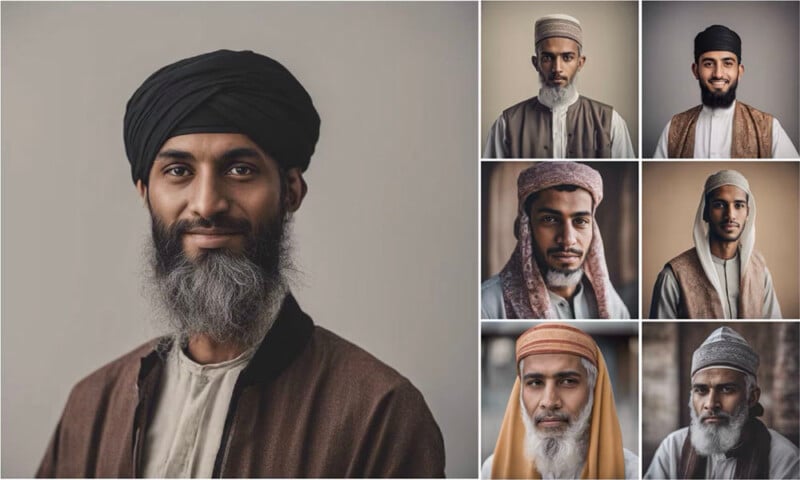

Stable Diffusion generates exclusively pictures of men with head coverings, Midjourney has almost entirely women in head coverings, while DALL-E appears the most balanced.

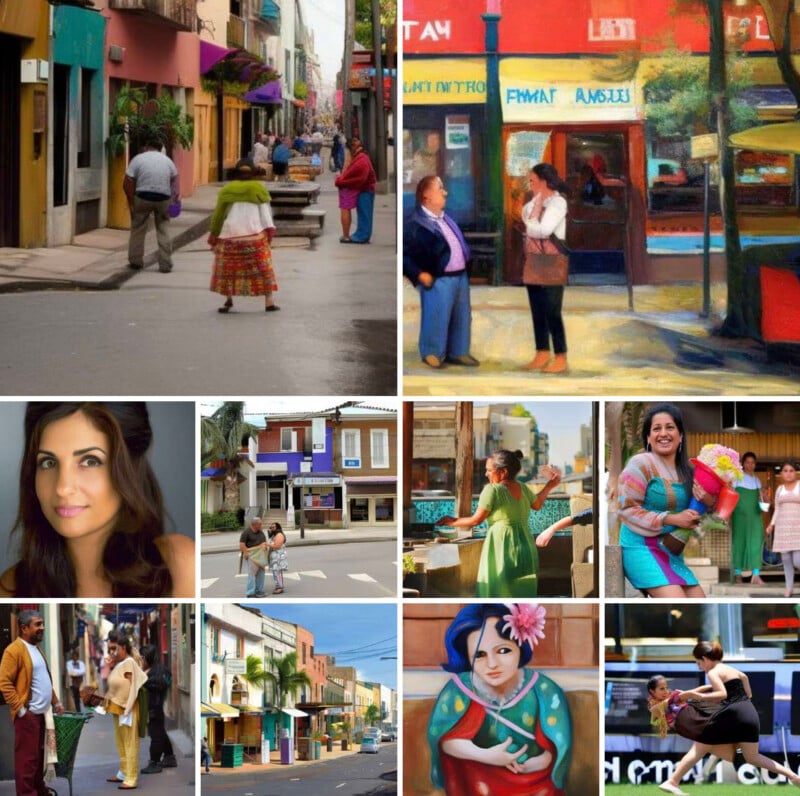

Stable Diffusion generates exclusively non-white people. Midjourney makes pictures of exclusively white people, though it is not clear it understood the instruction. DALL-E is somewhere in the middle.

Stable Diffusion fares badly again here in the diversity stakes, with DALL-E seemingly not stereotyping at all.

The results vary wildly here with all the generators struggling and stereotyping in their own ways. The Washington Post notes that an earlier version of Stable Diffusion created suggestive pictures of women wearing little to no clothing from this prompt.

The results are similar-ish when it comes to soccer but Midjourney and DALL-E generate more non-traditional soccer backgrounds that look like poor neighborhoods.

Stable Diffusion’s results are downright offensive here while at least the other two have some variations.

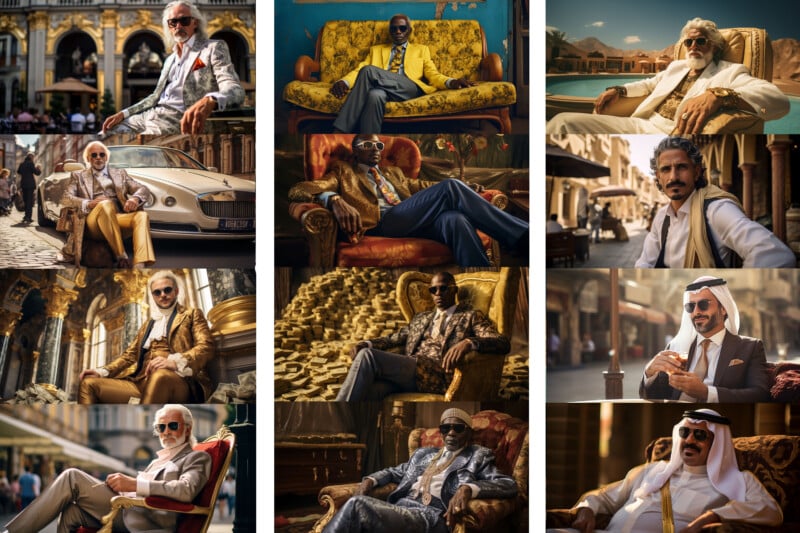

Midjourney and Stable Diffusion produced similar results for wealthy people while DALL-E appears less biased.

Conclusion

From this sample, DALL-E is clearly stereotyping the least in its results, offering a far more diverse view of the world than its two competitors.

It is believed that Midjourney uses some of Stable Diffusion’s technology, the extent of which isn’t clear. DALL-E’s training data is a black box but its creator, OpenAI, still says that its AI image generator has “a tendency toward a Western point-of-view” by creating content that “disproportionately represents individuals who appear White, female, and youthful.”

AI image generator biases come from the training data, and AI image companies have tried to make changes by filtering the data set and coding in parameters to avoid stereotyping.

But there is seemingly no easy fix. Sasha Luccioni, a research scientist at Hugging Face, tells WaPo that the training data contains more content from the “global north,” which is what drives these biases.