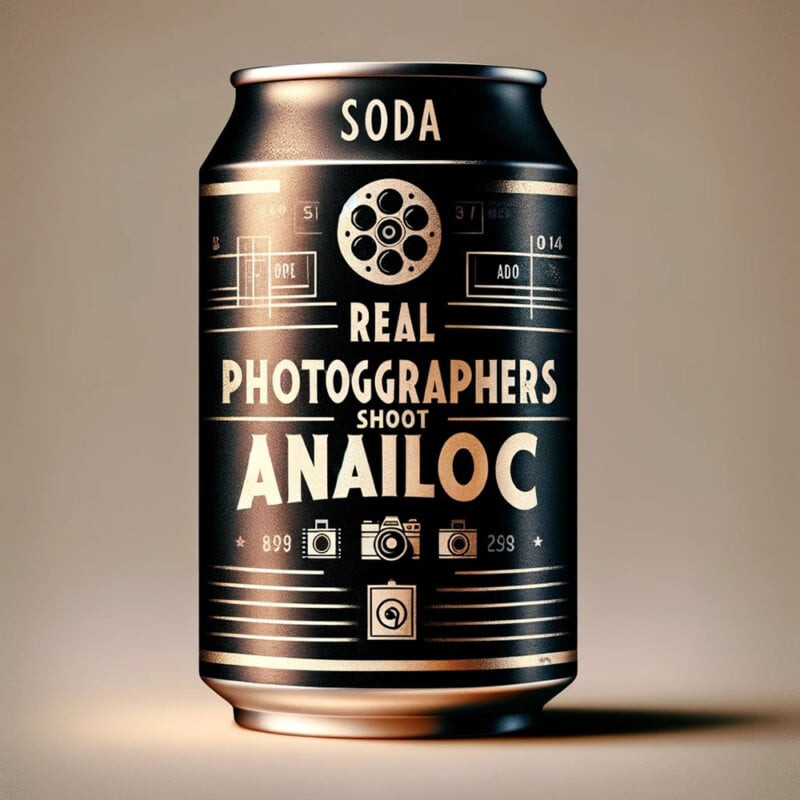

It’s getting harder and harder to distinguish between an AI image and a genuine photo. But there are still giveaways and one of them is that AI image generators struggle to produce coherent text.

AI companies often tout their latest model with being able to “generate text legibly” and while text rendering has been improved — the AI programs still trip up over it.

Why Can’t AI Image Generators Spell Words Correctly?

A simple explanation is that AI image generators are drawing on letters and numbers rather than typing them like humans do because it doesn’t know what text is.

“Right now, they fail for the same reason that we can get crazy images of hands with too many fingers or bizarre joints,” Professor Peter Bentley, a computer scientist and author based at University College London, tells PetaPixel.

“The image-generating AIs know nothing of our world, they do not understand 3D objects nor do they understand text when it appears in images.

“While they’ve been trained on huge amounts of text in the form of textual labels associated with images, text within an image is just another part of an image to them.

“So just as a feather could be shown in many variations and colors as long as it is ‘feather-like’ when asked to generate text many of the systems generate shapes that are ‘text like’.”

Humans understand what letters mean and how they make a word, the AI doesn’t. The AI sees text characters as just a different combination of lines and shapes.

Programs like DALL-E and Midjourney are built on artificial neural networks that learn associations between words and images. Some argue that an entirely new AI generator for text illustration is needed.

In the DALLE-2 paper, the authors say that the model does not “precisely encode spelling information of rendered text.” Meaning, the model is guessing at how the word should read.

Another research paper, this time from Google, suggests that adding more parameters (the variables that the models are trained on) can dramatically improve text rendering.

In much the same way that AI image generators struggle with hands, the AI struggles to conceptualize the 3D geometry of a word and ultimately it all comes down to the training data.

AI image generators will be trained on far more pictures of people’s faces than text in images. Hence, they do a better job of making images of people’s faces than text in an image.

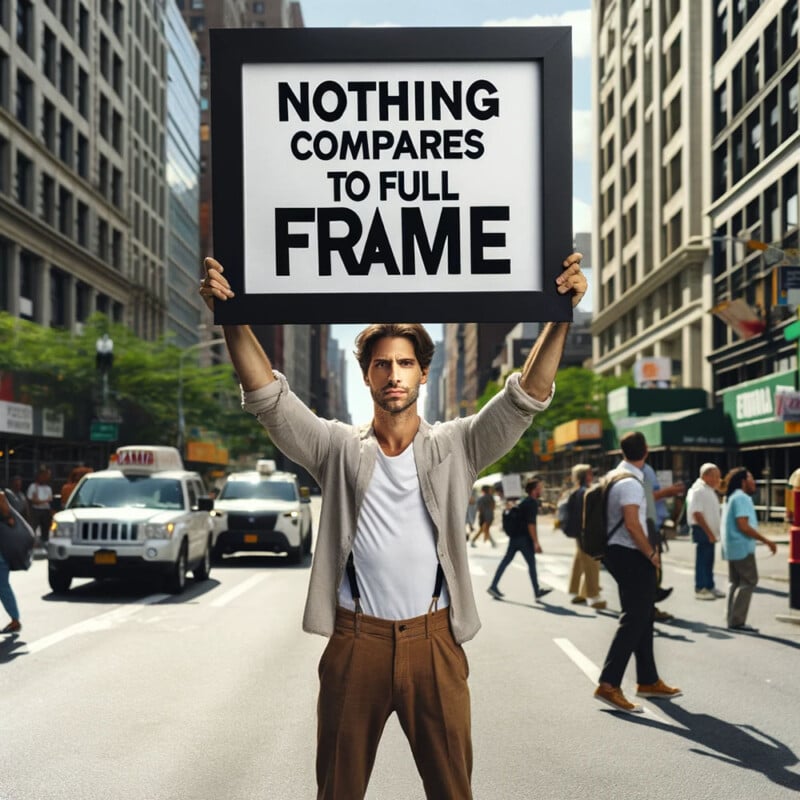

A good example of this is the Las Vegas sign. See, the AI models will have been trained on an untold number of pictures of the iconic Vegas sign. Therefore they can accurately recreate it. Conversely, the models have not been trained on pictures of a man holding a sign that reads, “Nothing Compares to Full Frame.” (But interestingly DALL-E did a good job of that one.)

For photographers or anyone who knows how to use Photoshop, some of these spelling errors made by the AI can be easily rectified.